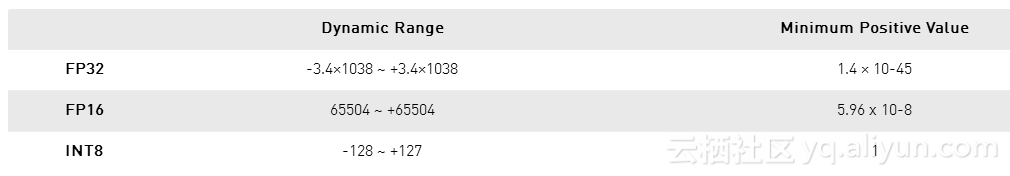

To assess whether 16-bit storage is viable for the lattice Boltzmann method (LBM), we first show that the range of numbers that commonly occurs in the LBM is much smaller than the FP16 number range. Based on this observation, we develop novel 16-bit formats, one based on a modified IEEE-754 standard and one based on a modified Posit standard, that are specifically tailored to the needs of the LBM. We then carry out an in-depth characterization of LBM accuracy for six test systems of increasing complexity: Poiseuille flow, Taylor-Green vortices, Karman vortex streets, lid-driven cavity, a microcapsule in shear flow (using the immersed-boundary method) and, finally, the impact of a raindrop (based on a Volume-of-Fluid approach). We find that the difference in accuracy between FP64 and FP32 is negligible in almost all cases, and that in a large number of cases even 16-bit precision is sufficient. Finally, we provide a detailed performance analysis of all precision levels on a large number of hardware microarchitectures and show that mixed FP32/16-bit precision achieves a significant speedup.

Floating-point data types include double precision (FP64), single precision (FP32), and half precision (FP16). FP16 can deliver better performance wherever half precision is accurate enough, for example in the training process of a neural network. Using cuBLAS, we can benefit from the Tensor Cores introduced with Volta in mixed-precision mode: FP16 inputs with FP32 accumulation. The NVIDIA Ampere architecture Tensor Cores build upon prior innovations by bringing new precisions, TF32 and FP64, to accelerate and simplify AI adoption and to extend the power of Tensor Cores to HPC. With additional support for bfloat16, INT8, and INT4, these third-generation Tensor Cores are versatile accelerators for both AI training and inference.
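The FP16 number range and the idea of combining FP16 inputs with FP32 accumulation can be explored with a small sketch. It uses only Python's standard `struct` module, whose `'e'` and `'f'` format codes pack IEEE-754 binary16 and binary32 values; round-tripping a Python float through them rounds it to that precision. The helper names (`to_fp16`, `to_fp32`, `dot_fp16_inputs`) are hypothetical, and the loop only emulates the numerics of mixed-precision accumulation; it is not how cuBLAS or Tensor Cores are actually invoked.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE-754 binary16 (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE-754 binary32 (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Key FP16 limits (binary16: 5 exponent bits, 10 stored mantissa bits).
FP16_MAX = 65504.0               # largest finite value
FP16_MIN_NORMAL = 2.0 ** -14     # smallest positive normal value
FP16_MIN_SUBNORMAL = 2.0 ** -24  # smallest positive subnormal value
FP16_EPS = 2.0 ** -10            # gap between 1.0 and the next FP16 value

def dot_fp16_inputs(a, b, accumulate_in_fp32: bool) -> float:
    """Dot product of FP16-rounded inputs.

    Each product and the running sum are rounded either to FP32
    (mixed precision: FP16 inputs, FP32 accumulation) or to FP16
    (pure half precision). The product of two FP16 values always
    fits exactly in FP32, so only the accumulation precision differs.
    """
    rnd = to_fp32 if accumulate_in_fp32 else to_fp16
    acc = 0.0
    for x, y in zip(a, b):
        acc = rnd(acc + rnd(to_fp16(x) * to_fp16(y)))
    return acc
```

With 4096 products of 1.0, FP32 accumulation returns 4096.0, while pure FP16 accumulation stalls at 2048.0: above 2048 the FP16 spacing is 2, so adding 1 produces a tie that rounds back to 2048 (round half to even). This is one reason mixed-precision schemes keep the accumulator in FP32.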

For single-GPU training, the RTX 2080 Ti will be 37% faster than the 1080 Ti with FP32, 62% faster with FP16, and 25% more costly; 35% faster than the 2080 with FP32, 47% faster with FP16, and 25% more costly; and 96% as fast as the Titan V with FP32 and 3% faster with FP16.

On FP16 vs. INT8 vs. INT4: always using FP64 would be ideal, but it is just too slow. Sadly, even FP32 is 'too small' for some calculations, and sometimes FP64 is used. If FH could use FP16, INT8, or INT4, it would indeed speed up the simulation. For reference, I run about six i5 machines at home, including a GTX 1050 Ti GPU that produces half of the total points.

Here, we evaluate the possibility of using even FP16 and Posit16 (half) precision for storing fluid populations, while still carrying out arithmetic operations in FP32. Half-precision floating-point numbers (FP16) have a much smaller range than FP32 or FP64.
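Storing fluid populations in FP16 while computing in higher precision can be illustrated on a single lattice node. This sketch rests on several assumptions that are not taken from the source: a 1D D1Q3 lattice (weights 2/3, 1/6, 1/6), a BGK collision, plain IEEE FP16 instead of the tailored 16-bit or Posit16 formats, and an optional shift that stores f_i - w_i instead of f_i (a commonly used trick for keeping stored values small; whether and how the source uses it is not stated here). Arithmetic runs in Python floats (FP64), which is at least as precise as FP32, so only the storage precision varies. All names are hypothetical.

```python
import struct

# D1Q3 lattice (illustrative assumption): rest, +1 and -1 velocities.
W = (2.0 / 3.0, 1.0 / 6.0, 1.0 / 6.0)
C = (0, 1, -1)
CS2 = 1.0 / 3.0  # lattice speed of sound squared

def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE-754 binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def equilibrium(rho: float, u: float) -> list:
    """Second-order equilibrium populations for D1Q3."""
    return [
        w * rho * (1.0 + c * u / CS2 + (c * u) ** 2 / (2.0 * CS2 ** 2)
                   - u * u / (2.0 * CS2))
        for w, c in zip(W, C)
    ]

def store(f, shifted: bool) -> list:
    """Compress populations to FP16 for storage, optionally storing f_i - w_i."""
    return [to_fp16(fi - w) if shifted else to_fp16(fi) for fi, w in zip(f, W)]

def load(s, shifted: bool) -> list:
    """Decompress stored FP16 populations back to working precision."""
    return [si + w if shifted else si for si, w in zip(s, W)]

def collide(f, tau: float) -> list:
    """BGK collision, computed entirely in working precision."""
    rho = sum(f)
    u = sum(c * fi for c, fi in zip(C, f)) / rho
    feq = equilibrium(rho, u)
    return [fi - (fi - fe) / tau for fi, fe in zip(f, feq)]

def roundtrip_error(f, shifted: bool) -> float:
    """Largest absolute error introduced by one store/load cycle."""
    restored = load(store(f, shifted), shifted)
    return max(abs(a - b) for a, b in zip(restored, f))
```

For a node at rest density 1.0 and velocity 0.01, `roundtrip_error(equilibrium(1.0, 0.01), shifted=True)` is more than an order of magnitude smaller than the unshifted error, because the stored deviations f_i - w_i are small numbers where FP16 values are densely spaced. A time step would then be `store(collide(load(s, shifted), tau), shifted)`.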

As of February 8, 2019, the NVIDIA RTX 2080 Ti is the best GPU for deep learning. One unit performs both FP16 and FP32 operations, while the unit that performs double-precision (FP64) floating-point operations is referred to as the DPU.

Fluid dynamics simulations with the lattice Boltzmann method (LBM) are very memory-intensive. Alongside a reduction in memory footprint, significant performance benefits can be achieved by using FP32 (single) precision instead of FP64 (double) precision, especially on GPUs.
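The memory argument can be made concrete with back-of-the-envelope arithmetic. This sketch assumes a D3Q19 lattice (19 populations per cell), counts only one copy of the populations (real codes may need a second copy or additional per-cell fields), and models a memory-bound update as reading and writing every population once per time step. The function names are hypothetical.

```python
# Bytes needed to store one value in each floating-point format.
BYTES_PER_VALUE = {"FP64": 8, "FP32": 4, "FP16": 2}

def population_bytes_per_cell(q: int, fmt: str) -> int:
    """Bytes of population storage per lattice cell (one copy of q values)."""
    return q * BYTES_PER_VALUE[fmt]

def population_memory_gib(nx: int, ny: int, nz: int, q: int, fmt: str) -> float:
    """Population storage for an nx * ny * nz grid, in GiB."""
    return nx * ny * nz * population_bytes_per_cell(q, fmt) / 2**30

def max_cell_updates_per_second(bandwidth_bytes_per_s: float, q: int, fmt: str) -> float:
    """Memory-bound upper limit: each population is read once and written once."""
    return bandwidth_bytes_per_s / (2 * population_bytes_per_cell(q, fmt))

if __name__ == "__main__":
    for fmt in ("FP64", "FP32", "FP16"):
        print(fmt, population_bytes_per_cell(19, fmt), "B/cell,",
              population_memory_gib(256, 256, 256, 19, fmt), "GiB for 256^3 cells")
```

For a 256³ grid this gives 2.375 GiB (FP64), 1.1875 GiB (FP32), and 0.59375 GiB (FP16) of population storage, and each halving of the bytes per value doubles the bandwidth-bound update rate. In a mixed FP32/16-bit scheme, only the storage shrinks; the arithmetic itself stays in FP32.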
